Learning to render novel views from wide-baseline stereo pairs

This example is a visual walkthrough of the paper "Learning to render novel views from wide-baseline stereo pairs". All the visualizations were created by editing the original source code to log data with the Rerun SDK.

Visual paper walkthrough

Novel view synthesis has made remarkable progress in recent years, but most methods require per-scene optimization on many images. In their CVPR 2023 paper, Yilun Du et al. propose a method that works with just two views. I created a visual walkthrough of the work using the Rerun SDK.

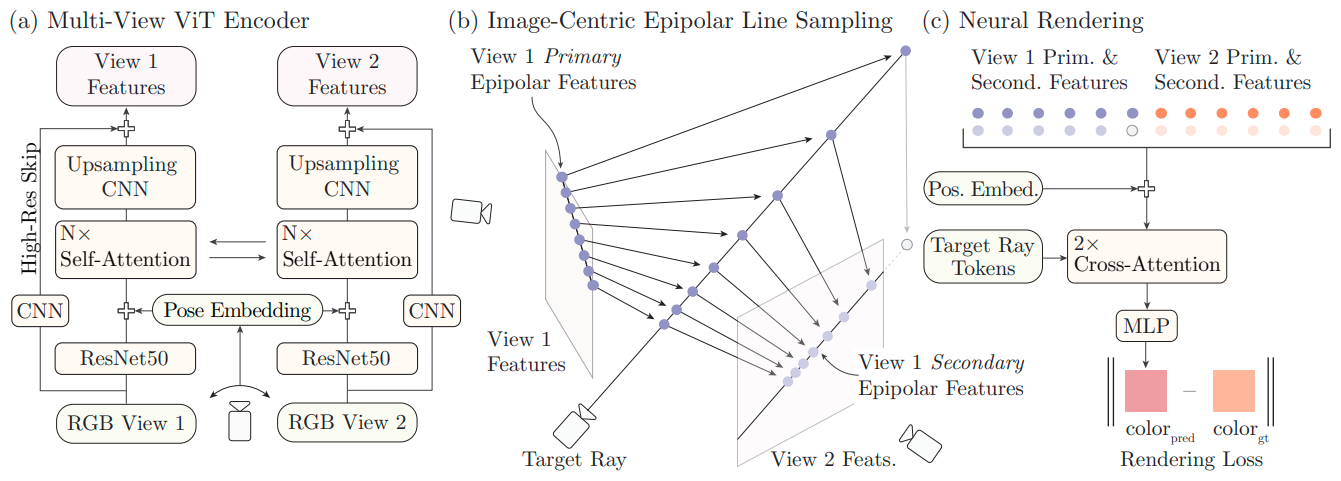

“Learning to Render Novel Views from Wide-Baseline Stereo Pairs” describes a three-stage approach. (a) Image features are extracted for each input view. (b) Features along the target rays are collected. (c) The color is predicted using cross-attention.
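Stage (c) can be illustrated with a minimal single-head, scaled dot-product cross-attention in NumPy. This is only a sketch under assumptions, not the paper's network: the function name `cross_attention`, the feature dimensions, and the use of a single query per target ray are all hypothetical choices made for illustration.

```python
import numpy as np

def cross_attention(query, keys, values):
    """Single-head scaled dot-product cross-attention for one target ray.

    query:  (d,)     embedding of the target ray (hypothetical)
    keys:   (n, d)   one key per sample along the ray
    values: (n, d_v) one value per sample, e.g. a feature or color vector
    """
    scores = keys @ query / np.sqrt(query.shape[0])
    scores -= scores.max()            # subtract the max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum()          # softmax over the n samples
    return weights @ values, weights

# Toy usage: four samples, the third one matches the query strongly.
rng = np.random.default_rng(0)
query = np.array([1.0, 0.0, 0.0, 0.0])
keys = np.zeros((4, 4))
keys[2] = 20.0 * query
values = rng.random((4, 3))           # stand-in RGB-like values
color, weights = cross_attention(query, keys, values)
```

In this toy setup almost all of the attention mass lands on the third sample, so the output is close to that sample's value.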

To render a pixel, its corresponding ray is projected onto each input image. Instead of sampling uniformly along the ray in 3D, the method distributes the samples so that they are equally spaced on the image plane. The same points are also projected onto the other view (shown in a light color).
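The difference between the two sampling strategies can be sketched with a simple pinhole camera. The code below is an illustrative sketch rather than the authors' implementation: the helper names `project` and `sample_ray_in_image`, the intrinsics `K`, the near/far bounds, and the example ray are all assumptions. It projects the two ends of the ray segment into an input view and interpolates between them in pixel space.

```python
import numpy as np

def project(K, R, t, points):
    """Project (N, 3) world points to (N, 2) pixels with a pinhole camera."""
    cam = points @ R.T + t            # world frame -> camera frame
    pix = cam @ K.T                   # camera frame -> homogeneous pixels
    return pix[:, :2] / pix[:, 2:3]   # perspective divide

def sample_ray_in_image(K, R, t, origin, direction, near, far, n_samples):
    """Pixel locations equally spaced along the projection of a ray segment."""
    endpoints = np.stack([origin + near * direction, origin + far * direction])
    p_near, p_far = project(K, R, t, endpoints)
    s = np.linspace(0.0, 1.0, n_samples)[:, None]
    return (1.0 - s) * p_near + s * p_far

# Hypothetical input camera at the world origin looking down +z.
K = np.array([[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]])
R, t = np.eye(3), np.zeros(3)
origin = np.array([0.5, 0.2, 0.0])          # target camera center
direction = np.array([-0.1, 0.05, 1.0])     # target ray direction

image_samples = sample_ray_in_image(K, R, t, origin, direction, 1.0, 10.0, 8)

# For comparison: uniform steps in 3D depth, then projected into the image.
depths = np.linspace(1.0, 10.0, 8)[:, None]
depth_samples = project(K, R, t, origin + depths * direction)
```

Here `image_samples` advance by a constant pixel step, while `depth_samples` bunch up toward the far end of the projected segment, which is the effect the image-space sampling avoids.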

The image features at these samples are used to synthesize new views. The method learns to attend to the features close to the surface. Here we show the attention maps for one pixel, and the pseudo-depth maps that result from interpreting the attention as a probability distribution.
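One way to read attention as a probability distribution is to normalize the weights over the samples of each ray and take the expected sample depth. The sketch below assumes exactly that reduction; the function name `pseudo_depth` and the toy numbers are hypothetical, and the walkthrough's own code may compute the maps differently.

```python
import numpy as np

def pseudo_depth(attention, sample_depths, eps=1e-8):
    """Expected depth per ray from non-negative attention weights.

    attention:     (..., n) attention weights over the n samples of a ray
    sample_depths: (..., n) or (n,) depth of each sample
    """
    attention = np.asarray(attention, dtype=float)
    # Normalize so the weights of each ray sum to one.
    probs = attention / (attention.sum(axis=-1, keepdims=True) + eps)
    return (probs * sample_depths).sum(axis=-1)

# Toy usage: two rays with four samples each.
attention = np.array([
    [0.25, 0.25, 0.25, 0.25],   # diffuse attention, no clear surface
    [0.00, 0.00, 1.00, 0.00],   # attention peaked at the third sample
])
sample_depths = np.array([1.0, 2.0, 3.0, 4.0])
depth = pseudo_depth(attention, sample_depths)
```

The peaked ray yields a depth near its attended sample, while the diffuse ray falls back to the mean of the sample depths, which is why sharp attention produces cleaner pseudo-depth maps.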

Make sure to check out the paper by Yilun Du, Cameron Smith, Ayush Tewari, and Vincent Sitzmann to learn about the details of the method.