ARKit scenes

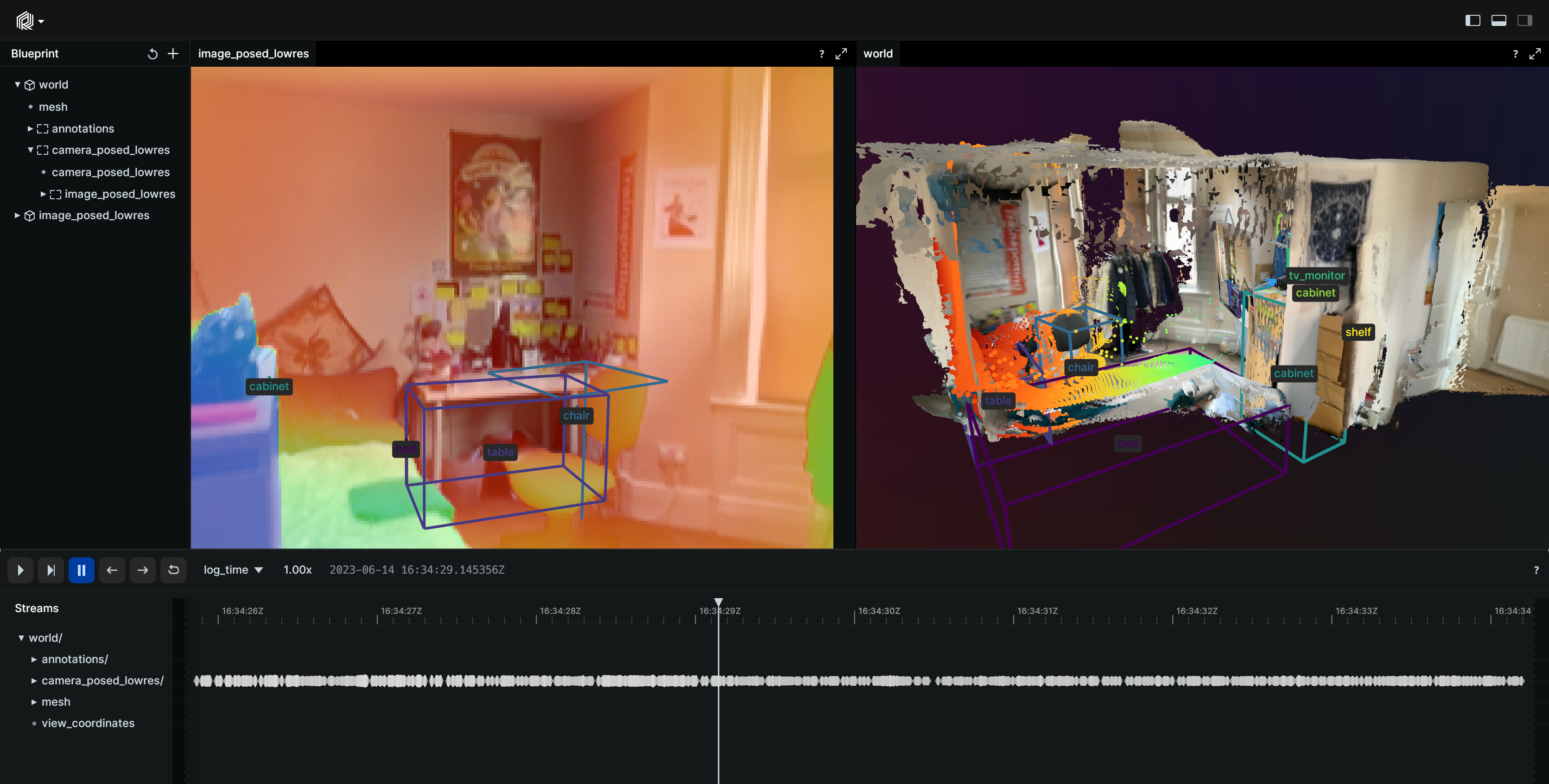

This example visualizes the ARKitScenes dataset using Rerun. The dataset contains color images, depth images, the reconstructed mesh, and labeled bounding boxes around furniture.

Used Rerun types

Image, DepthImage, Transform3D, Pinhole, Mesh3D, Boxes3D, TextDocument

Background

The ARKitScenes dataset, captured using Apple's ARKit technology, encompasses a diverse array of indoor scenes, offering color and depth images, reconstructed 3D meshes, and labeled bounding boxes around objects like furniture. It's a valuable resource for researchers and developers in computer vision and augmented reality, enabling advancements in object recognition, depth estimation, and spatial understanding.

Logging and visualizing with Rerun

This visualization through Rerun highlights the dataset's potential in developing immersive AR experiences and enhancing machine learning models for real-world applications, while showcasing Rerun's visualization capabilities.

Logging a moving RGB-D camera

To log a moving RGB-D camera, we log four components: the camera's intrinsics (via a pinhole camera model), its pose (extrinsics), the color image, and the depth image. The intrinsics define the camera's lens properties and the pose gives its position and orientation; together they define the 3D-to-2D mapping. The RGB and depth images are then logged as child entities of the camera, so the camera's viewpoint and the scene it captures are all stored under the same entity path.

    # Camera pose (extrinsics)
    rr.log("world/camera_lowres", rr.Transform3D(transform=camera_from_world))
    # Camera intrinsics (pinhole model)
    rr.log("world/camera_lowres", rr.Pinhole(image_from_camera=intrinsic, resolution=[w, h]))
    # Color and depth images, logged as child entities of the camera
    rr.log(f"{entity_id}/rgb", rr.Image(rgb).compress(jpeg_quality=95))
    rr.log(f"{entity_id}/depth", rr.DepthImage(depth, meter=1000))
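To make the 3D-to-2D mapping concrete, here is a minimal sketch in plain Python of what the pose and the pinhole intrinsics describe together. The helpers `project_point` and `depth_to_meters`, the intrinsics values, and the identity pose are illustrative assumptions and not part of the example's code. The sketch also assumes a camera frame whose z-axis points forward.

```python
# Hypothetical helpers, for illustration only (not part of the Rerun SDK).


def project_point(point_world, rotation, translation, intrinsic):
    """Project a world-space point to pixel coordinates.

    Assumes `rotation` (3x3) and `translation` (3 values) map world
    coordinates to camera coordinates, and `intrinsic` is a 3x3 pinhole
    matrix [[fx, 0, cx], [0, fy, cy], [0, 0, 1]].
    """
    # World -> camera (extrinsics).
    p_cam = [
        sum(rotation[r][c] * point_world[c] for c in range(3)) + translation[r]
        for r in range(3)
    ]
    if p_cam[2] <= 0:
        return None  # The point is behind the camera.

    # Camera -> image (intrinsics): perspective divide, then scale and shift.
    fx, fy = intrinsic[0][0], intrinsic[1][1]
    cx, cy = intrinsic[0][2], intrinsic[1][2]
    u = fx * p_cam[0] / p_cam[2] + cx
    v = fy * p_cam[1] / p_cam[2] + cy
    return (u, v)


def depth_to_meters(raw_depth, meter=1000):
    """Convert a raw depth value to meters; `meter` is the number of raw units per meter."""
    return raw_depth / meter


# Made-up values: identity pose and a simple intrinsics matrix.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
example_intrinsic = [[200.0, 0.0, 128.0], [0.0, 200.0, 96.0], [0.0, 0.0, 1.0]]

pixel = project_point([0.5, -0.25, 2.0], identity, [0.0, 0.0, 0.0], example_intrinsic)
depth_m = depth_to_meters(1500)  # With meter=1000, raw values are millimeters.
```

With `meter=1000`, as in the `rr.DepthImage` call above, a raw depth value of 1500 corresponds to 1.5 meters.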

Ground-truth mesh

The mesh is logged as an rr.Mesh3D archetype. In this case the mesh is composed of mesh vertices, indices (i.e., which vertices belong to the same face), and vertex colors.

    rr.log(
        "world/mesh",
        rr.Mesh3D(
            vertex_positions=mesh.vertices,
            vertex_colors=mesh.visual.vertex_colors,
            indices=mesh.faces,
        ),
        timeless=True,
    )

Here, the mesh is logged to the world/mesh entity and is marked as timeless, since it does not change in the context of this visualization.
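As a rough illustration of how vertices, face indices, and vertex colors relate, the sketch below builds a tiny two-triangle mesh in plain Python and checks that it is consistent. The data and the `validate_mesh` helper are hypothetical; in the example itself these arrays come from `mesh.vertices`, `mesh.faces`, and `mesh.visual.vertex_colors`.

```python
# A unit square in the z=0 plane, split into two triangles (made-up data).
vertex_positions = [
    [0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0],
    [0.0, 1.0, 0.0],
]

# One RGBA color per vertex.
vertex_colors = [
    [255, 0, 0, 255],
    [0, 255, 0, 255],
    [0, 0, 255, 255],
    [255, 255, 0, 255],
]

# Each face lists three indices into `vertex_positions`.
faces = [
    [0, 1, 2],
    [0, 2, 3],
]


def validate_mesh(positions, colors, triangles):
    """Hypothetical sanity check: one color per vertex, all indices in range."""
    if len(colors) != len(positions):
        raise ValueError("expected exactly one color per vertex")
    for triangle in triangles:
        if len(triangle) != 3:
            raise ValueError("each face must reference exactly three vertices")
        for index in triangle:
            if not 0 <= index < len(positions):
                raise ValueError(f"vertex index {index} is out of range")
    return True


is_valid = validate_mesh(vertex_positions, vertex_colors, faces)
```

Vertices 0 and 2 appear in both faces, which is how the index list expresses that the two triangles share an edge.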

Logging 3D bounding boxes

Here we loop through the data and add bounding boxes to all the items found.

    for i, label_info in enumerate(annotation["data"]):
        # uid, label, half_size, centroid, and rot are derived from label_info (omitted here)
        rr.log(
            f"world/annotations/box-{uid}-{label}",
            rr.Boxes3D(
                half_sizes=half_size,
                centers=centroid,
                rotations=rr.Quaternion(xyzw=rot.as_quat()),
                labels=label,
                colors=colors[i],
            ),
            timeless=True,
        )
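The snippet above passes half-extents and a quaternion in `xyzw` order to `rr.Boxes3D`. The following sketch shows, in plain Python, how such values could be computed. It assumes, hypothetically, that an annotation provides full edge lengths and a rotation about the z-axis; the helper names and numbers are made up for illustration and do not reflect the actual annotation format.

```python
import math


def half_size_from_lengths(axis_lengths):
    """Convert full box edge lengths to the half-extents a box primitive expects."""
    return [0.5 * length for length in axis_lengths]


def yaw_to_quaternion_xyzw(yaw_radians):
    """Quaternion for a rotation about the z-axis, scalar component last (x, y, z, w)."""
    half_angle = 0.5 * yaw_radians
    return [0.0, 0.0, math.sin(half_angle), math.cos(half_angle)]


# Made-up box: 2.0 x 1.0 x 0.8 units, rotated 90 degrees about the z-axis.
half_size = half_size_from_lengths([2.0, 1.0, 0.8])
quaternion = yaw_to_quaternion_xyzw(math.pi / 2)
```

Getting the component order right matters: a quaternion written as `wxyz` but interpreted as `xyzw` describes a different rotation.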

Setting up the default blueprint

This example benefits a lot from having a custom blueprint defined. This is done with the following code:

    primary_camera_entity = HIGHRES_ENTITY_PATH if args.include_highres else LOWRES_POSED_ENTITY_PATH

    blueprint = rrb.Horizontal(
        rrb.Spatial3DView(name="3D"),
        rrb.Vertical(
            rrb.Tabs(
                rrb.Spatial2DView(
                    name="RGB",
                    origin=primary_camera_entity,
                    contents=["$origin/rgb", "/world/annotations/**"],
                ),
                rrb.Spatial2DView(
                    name="Depth",
                    origin=primary_camera_entity,
                    contents=["$origin/depth", "/world/annotations/**"],
                ),
                name="2D",
            ),
            rrb.TextDocumentView(name="Readme"),
        ),
    )

    rr.script_setup(args, "rerun_example_arkit_scenes", default_blueprint=blueprint)

In particular, we want to reproject 3D annotations onto the 2D camera views. To configure such a view, two things are necessary:

- The view origin must be set to the entity that contains the pinhole transforms. In this example, the entity path is stored in the primary_camera_entity variable.
- The view contents must explicitly include the annotations, which are not logged in the subtree defined by the origin. This is done using the contents argument, here set to ["$origin/depth", "/world/annotations/**"].
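To illustrate how the `$origin` placeholder in the view contents relates to the view origin, the sketch below mimics the substitution in plain Python. `expand_contents` is a hypothetical helper written for this illustration, not a Rerun API, and the origin value `/world/camera_lowres` is an assumption based on the entity path used in the logging calls earlier.

```python
def expand_contents(origin, contents):
    """Hypothetical helper: replace the `$origin` placeholder in each content expression."""
    return [expression.replace("$origin", origin) for expression in contents]


depth_view_contents = expand_contents(
    "/world/camera_lowres",  # Assumed origin; the example uses primary_camera_entity.
    ["$origin/depth", "/world/annotations/**"],
)
```

The first expression resolves to a path inside the origin's subtree, while the second is an absolute path outside it, which is why the annotations have to be listed explicitly.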

Run the code

To run this example, make sure you have the Rerun repository checked out and the latest SDK installed:

    # Setup
    pip install --upgrade rerun-sdk  # install the latest Rerun SDK
    git clone git@github.com:rerun-io/rerun.git  # Clone the repository
    cd rerun
    git checkout latest  # Check out the commit matching the latest SDK release

Install the necessary libraries specified in the requirements file:

    pip install -e examples/python/arkit_scenes

To run this example, use:

    python -m arkit_scenes